By Punit Nandi

Computers and computing devices, as we know them today, are used for a wide range of activities: computing data, communicating with people thousands of miles away, entertainment, recording and safekeeping files, and more.

However, when computing machines were first conceptualized, they were serious business and were mainly used for crunching large sets of numbers. It is amusing to recall now that those same calculations can be completed hundreds of times faster by a tiny computing device such as a smartphone. However fascinating it looks, it was never this easy. Humans took more than a quadricentennial (400 years) to make this transition, and the facts surrounding it are truly amazing.

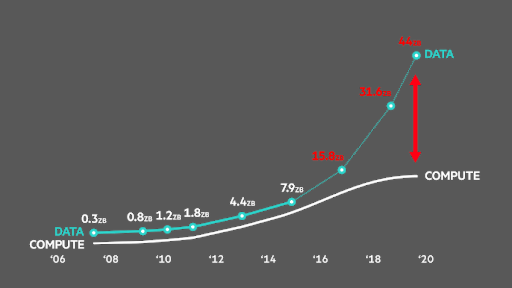

Another interesting factor surrounding the evolution of computing is data generation. It is mind-boggling to think that approximately 90% of the data in the world today was created within the last two years alone.

Data curve from IDC/EMC Digital Universe reports 2008-2017 Credits: Weforum.org

In this blog, we will go on a journey through the world of computing and understand how computing started in the first place and the accomplishments we have made since. We will also dedicate a small section of our blog to the evolution of data generation.

This blog is divided into the following sections:

- The Early Computing Age

- A Milestone for Data Storage

- The Beginning of Telecommunication and Computers

- Modern Computers and Programming

- Personal Computer Revolution

- Artificial Intelligence, Machine Learning, and Data Science

- Internet, Data Explosion and Financial Markets

The Early Computing Age

The story goes back to the late 1200s, when the philosopher Ramon Llull carried out thought experiments on logical machines that could produce new knowledge in a systematic and simple way. Given the scarcity of technological resources, it was not possible to build such a machine at that time. However, it gave way to a new style of thinking about combining logical operations to reach meaningful conclusions. Llull's work had a major influence on Gottfried Leibniz, a German mathematician, who in 1671 designed a machine that could multiply by repeated addition and divide by repeated subtraction on decimal numbers. It was known as the “Step Reckoner”. Leibniz was a firm believer in computing machines and stated that smart people should not waste time on calculation.

Step Reckoner (Wikipedia.com)

Interestingly, about three decades before Leibniz’s invention, in neighbouring France, the mathematician Blaise Pascal invented the Pascaline. The Pascaline, built in 1642-44, was a basic calculator, or totalizer, used solely for addition and subtraction; numbers were entered by turning its dials. Below is a picture of it:

Pascaline (Wikipedia.com)

In the 1660s, roughly two decades after the Pascaline was introduced, Samuel Morland, influenced by Pascal's work, invented two calculators with eight dials that could be moved by a stylus. One of the surviving models could add decimal numbers up to one million.

While the 1600s were incredible for computing, the 1700s saw comparatively few pioneering inventions. At the beginning of the 1800s, in 1804–05 to be precise, Joseph Jacquard developed the Jacquard system. It used interchangeable punched cards to control the weaving of cloth and could produce any pattern. Punched cards were later used by great inventors like Charles Babbage and Herman Hollerith in their pioneering computational machines. Although the Jacquard system was not directly concerned with computing numbers, its punched-card design was a great addition to the computing engines designed later.

Around 20 years later, Charles Babbage designed the “Difference Engine”, often described as the first automatic computing device. He announced it in 1822 and worked on building it over 1822-1830. It was conceived as a major step up from a simple calculator and could tabulate complicated functions over a range of values. It worked on the method of finite differences, which reduces the tabulation of polynomial values to repeated addition, and it could be reset once a task was completed. The Difference Engine also had temporary data storage for intermediate steps and used metal pointers to mark its output, which was later impressed onto a printing plate. It was a logically structured computational machine that has had a great influence on modern computing.
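The Difference Engine is usually described as mechanizing the method of finite differences: once the first few values of a polynomial are known, every further value can be produced using additions alone. The snippet below is a minimal sketch of that idea in Python, not a model of Babbage's actual mechanism. The function names and the sample polynomial `x^2 + x + 41` are chosen purely for illustration.

```python
def difference_table(values):
    """Return the leading entry of each difference column for a list of values."""
    columns = [list(values)]
    while len(columns[-1]) > 1:
        prev = columns[-1]
        columns.append([b - a for a, b in zip(prev, prev[1:])])
    return [col[0] for col in columns]


def tabulate(initial, steps):
    """Extend a table of values using additions only."""
    # registers = [f(0), first difference, second difference, ...]
    registers = list(initial)
    results = []
    for _ in range(steps):
        results.append(registers[0])
        # Add each higher-order difference into the register below it.
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return results


# Illustrative polynomial (an assumption, not from the article): f(x) = x^2 + x + 41
seed = [x * x + x + 41 for x in range(3)]  # three values pin down a quadratic
initial = difference_table(seed)
table = tabulate(initial, 8)
print(table)
```

After the three seed values are computed, the loop in `tabulate` never multiplies. That is why the approach suited a machine built from adding wheels.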

The Difference Engine (Credits: Wikipedia.com)

Interestingly, during the same period, Ada Lovelace, a young and brilliant mathematician, met Charles Babbage at a party, and the duo started to work on the “Analytical Engine”. She is also credited with being “the first programmer in the world”, a title that is still hotly debated. Her first program revolved around the calculation of “Bernoulli numbers”, one of the oldest and most interesting problems in mathematics. A critical attribute of the Analytical Engine was that it could place numbers and instructions in temporary storage and compute with them whenever required. This was accomplished by feeding the instructions and data into its reader in the proper order. The idea of storing numbers for temporary use is still very prevalent today and can be seen in modern business calculators and even in the simplest computing devices. Given all these events, it is evident that interest in computing was picking up among the academic and research fraternity.
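For readers curious about what computing Bernoulli numbers involves, here is a small modern sketch. It is not a reconstruction of Lovelace's program. It uses a standard textbook recurrence and the convention B_1 = -1/2, and the function name is an assumption made for this example.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n):
    """Return [B_0, ..., B_n] as exact fractions (convention: B_1 = -1/2)."""
    b = [Fraction(1)]
    for m in range(1, n + 1):
        # Recurrence: sum_{k=0}^{m} C(m+1, k) * B_k = 0, solved for B_m.
        acc = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-acc / (m + 1))
    return b


for index, value in enumerate(bernoulli_numbers(8)):
    print(index, value)
```

Each new number depends on all the earlier ones, so the calculation needs the stored intermediate results that the Analytical Engine was designed to keep.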

A Milestone for Data Storage

During the 1880s, Herman Hollerith, a young American inventor, invented the Tabulating Machine, built specifically for processing the US Census. It was an electromechanical device for summarizing information stored on punched cards. The mechanism was simple: the machine read one punched card at a time, in sequence, and stored the resulting arithmetic values in an accumulator. With this, the importance of data storage became clear, and further advances in this space followed. Modified versions of the tabulating machine were in use as recently as the mid-1980s, and punched-card voting ballots can still be seen around the globe to this day.

Tabulating Machine (Credits: Wikipedia.com)

The Beginning of Telecommunication and Computers

In 1903, Nikola Tesla (one of the greatest inventors of all time) was granted patent No. US725605 for his invention, a “system of signalling”. It describes the wireless transmission of messages, and its ideas were later reflected in frequency-hopping spread spectrum (FHSS) systems. FHSS rapidly switches a radio signal among many frequency channels in a sequence known only to the transmitter and the receiver. This technology is now used in communication systems such as Bluetooth and some wireless LANs.

The mechanism currently used in FHSS was also partly described by the actress and inventor Hedy Lamarr in her patented invention, the “Secret Communication System”. It was developed for the US Navy during World War 2 to protect radio-guided torpedoes from jamming; however, the Navy did not adopt it during the war. Simply put, FHSS spreads a signal over a wider bandwidth by varying its frequency over time. It is currently used to create secure connections, to increase the range of communication channels and to reduce noise and radio jamming.

The frequency-hopping spread spectrum system (FHSS) can rightly be seen as an early milestone in modern wireless telecommunications.
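The core idea of frequency hopping, a channel sequence that only the transmitter and receiver can reproduce, can be sketched in a few lines. This is a toy illustration only. Real radios use purpose-built sequence generators rather than Python's `random` module, and the seed values and the channel count of 79 (the figure used by classic Bluetooth) are assumptions for this example.

```python
import random

CHANNELS = 79  # assumption: number of available frequency channels


def hop_sequence(shared_seed, hops, channels=CHANNELS):
    """Derive a pseudo-random list of channel numbers from a shared secret seed."""
    rng = random.Random(shared_seed)
    return [rng.randrange(channels) for _ in range(hops)]


# Both ends know the same seed, so they hop in lockstep.
transmitter = hop_sequence(shared_seed=1942, hops=10)
receiver = hop_sequence(shared_seed=1942, hops=10)

# A listener without the seed derives a different sequence and loses the signal.
eavesdropper = hop_sequence(shared_seed=7, hops=10)

print(transmitter == receiver)
print(transmitter)
print(eavesdropper)
```

Because the signal stays on any single channel only briefly, a jammer or listener who stays on one frequency catches only a fragment of the transmission.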

The rapid development stage

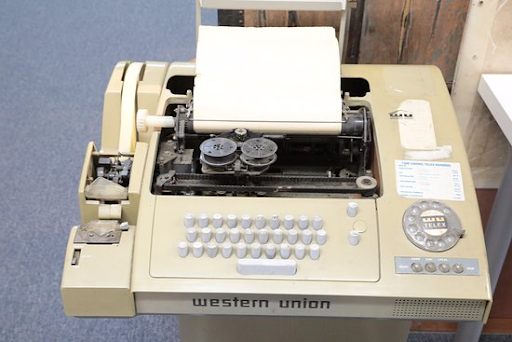

Telecommunications is one of the key drivers of growth in modern computing. In the early 1930s, the Telex messaging network was invented. It allowed two-way text messaging by connecting teleprinters to the same network over telephone-grade circuits. This was the first time two-way communication was performed with such accuracy. One of the key advances of telex messaging was the “Who are You” code. With this code, once a message had been sent, a reply could also be sent to complete the communication session. This interactive feature brought immense popularity to the telex system.

Tele messaging system (Credit: Wikipedia.com)

Turing and AI

In January 1937, a young mathematician, Alan Turing, published a research paper titled “On Computable Numbers, with an Application to the Decision Problem”. The theories in this paper form the very base on which modern computer science is built. He described a theoretical model of a hypothetical computing machine that could work out a result from a given input. This later became known as the “Turing Machine”. There can be many different Turing machines, and a “Universal Machine” can be created that simulates all of them. All of this was proposed by Turing himself. No wonder he is regarded as the “Father of Computer Science”.

Turing is also widely regarded as the earliest pioneer in the field of Artificial Intelligence. He proposed the “Turing Test”, a theoretical test that a computer passes if its responses are indistinguishable from a human's. In the setup, one person acts as a questioner in one room, while a second person and the computer act as respondents in a different room. The questioner asks a series of questions, and both the second person and the computer respond. If the questioner is duped at least 30% of the time into thinking that the computer's responses came from a human, the computer is said to have passed the “Turing Test”. Although it was widely regarded as a tough benchmark, in 2014, 64 years after the test was proposed, “Eugene Goostman”, a chatbot, was reported to have passed it in London by duping the questioners 33% of the time.
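A Turing machine is simple enough to simulate in a few lines: a tape of symbols, a read/write head, a current state, and a table of rules. The sketch below is one minimal way to write such a simulator. The rule format, the state names and the binary-increment example are assumptions made for illustration and do not follow Turing's original notation.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Run a one-tape machine until it reaches 'halt' and return the tape contents."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        # Each rule maps (state, symbol) to (symbol to write, move, next state).
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Example machine: add one to a binary number.
# It walks to the right end of the input, then propagates a carry leftwards.
INCREMENT = {
    ("start", "0"): ("0", "R", "start"),
    ("start", "1"): ("1", "R", "start"),
    ("start", "_"): ("_", "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),
    ("carry", "0"): ("1", "L", "halt"),
    ("carry", "_"): ("1", "L", "halt"),
}

print(run_turing_machine(INCREMENT, "1011"))  # 11 + 1 = 12 -> 1100
```

The simulator itself plays the role of a very small “universal machine”: the same `run_turing_machine` function can run any machine whose rule table is passed in.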

This test is regarded as one of the important theories which led to the development of AI and conceptualized the idea of a “thinking machine”, which is what most modern computer scientists have been working on!

Modern Computers and Programming

Even after all this progress, it was only in late 1954 that a real market for business computers began to emerge, with the launch of the IBM 650. It stored both data and addresses as decimal digits, each encoded with a two-state component and a five-state component, for example “01-00100”. It could also convert alphabetic and special characters from punched cards into two-digit decimal codes. These features attracted students in particular, along with people from the business and scientific worlds. It sold around 2,000 units, a huge number compared with other computing machines available at that time. IBM itself once noted, “No other electronic computer had been produced in such quantity.”

In 1957, “FORTRAN”, a language for numeric computation, was launched by researchers at IBM. It is also known as the “daddy of programming systems”. Ideas from FORTRAN later influenced popular programming languages like C and C++. When it was first launched, it had 32 statements, including DO and IF. Interestingly, its compiler weighted the branches of “IF” statements with probabilities and ran a “Monte Carlo simulation” to optimize the placement of basic blocks in memory. This was a very advanced optimization technique even by current computing standards. Below is an example of Fortran 90 code:
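The two-part digit format mentioned above is usually called bi-quinary coded decimal: one group says whether the digit is in the range 0-4 or 5-9, and the other says which position within that range. Here is a minimal sketch of such an encoding. The bit ordering (`0 5` for the first group and `0 1 2 3 4` for the second) is an assumption made for this example, and under it the article's sample “01-00100” decodes to 7.

```python
def to_biquinary(digit):
    """Encode one decimal digit as 'bb-qqqqq' (assumed bit order: 0 5 - 0 1 2 3 4)."""
    if not 0 <= digit <= 9:
        raise ValueError("digit must be between 0 and 9")
    bi = "01" if digit >= 5 else "10"
    quinary = ["0"] * 5
    quinary[digit % 5] = "1"
    return bi + "-" + "".join(quinary)


def from_biquinary(code):
    """Decode a 'bb-qqqqq' string, rejecting codes without exactly one bit per group."""
    bi, quinary = code.split("-")
    if bi.count("1") != 1 or quinary.count("1") != 1:
        raise ValueError("invalid bi-quinary code")
    return (5 if bi == "01" else 0) + quinary.index("1")


print(to_biquinary(7))
print(from_biquinary("01-00100"))
```

A practical benefit of this kind of code is built-in error checking: every valid digit has exactly one bit set in each group, so many hardware faults produce a pattern that is obviously invalid.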

    PROGRAM Triangle
      IMPLICIT NONE
      REAL :: a, b, c, Area
      PRINT *, 'Welcome, please enter the&
              &lengths of the 3 sides.'
      READ *, a, b, c
      PRINT *, 'Triangle''s area: ', Area(a,b,c)
    END PROGRAM Triangle

    FUNCTION Area(x,y,z)
      IMPLICIT NONE
      REAL :: Area ! function type
      REAL, INTENT( IN ) :: x, y, z
      REAL :: theta, height
      theta = ACOS((x**2+y**2-z**2)/(2.0*x*y))
      height = x*SIN(theta); Area = 0.5*y*height
    END FUNCTION Area

Source: Example of a Fortran 90 program

Due to its mathematical style, the input was also fed applying the same logic.

For the first time, a non-programmer could read a program and get an idea of exactly what it did, given the simplicity of the code. This was a powerful step in opening up computing devices to a much wider audience. Moreover, in 1958-59, Jack Kilby and Robert Noyce unveiled the integrated circuit. Although they worked separately, their inventions were very similar. Kilby invented a hybrid IC (with external wire interconnections) and Noyce invented a fully monolithic IC (a complete set of electronic circuitry on one small semiconductor chip). This was a turning point for the computing industry, as ICs were both very small and low in cost. It meant that computing devices could be manufactured on a larger scale than before and at a price that benefited producers.

The First Modem

In 1958, Bell Labs (then the research arm of AT&T) launched the Bell 101 dataphone. A dataphone is similar to a modern modem: data could now be transferred from one computer to another over a telephone line at a speed of 110 bits per second. However, the Bell 103 modem was more successful than its predecessor and could transmit data at 300 bits per second. In fact, the Bell 103 standard is still in use today for some non-commercial purposes.

BASIC Language

With all the inventions taking place in the field of computing, the programming fraternity was growing rapidly too, and in 1964 Thomas E. Kurtz and John G. Kemeny launched the first version of the BASIC language. BASIC originally used a number at the start of every line to set the order of commands within the program. Lines were numbered in steps, such as 30, 40, 50, which left room for extra instructions to be inserted between commands if required. Another element, the "GOTO" statement, jumps to another numbered line and is often used to loop back to earlier instructions.

For instance, line 350 of a BASIC program might contain an "IF" clause that instructs the computing device to jump back to line 200 if a variable is greater than 40. This instruction can be written as follows:

350 IF (N >40) THEN GOTO 200

BASIC was influenced by languages such as ALGOL 58, which was built around the concept of block structure, where keywords like "begin" and "end" (or something equivalent) mark the boundaries of a block. Continuing the exciting trend of new software, in 1969 developers at Bell Labs created UNIX, an operating system that solved compatibility problems. The operating system was portable and could be moved across multiple platforms, which made it popular among government institutions and business entities.

The ’70s

By the early 1970s, the computing industry had started to take a definite direction, and new inventions began revolutionizing the business. For instance, in 1970, Intel Corporation unveiled the Intel 1103, the first commercially available dynamic random-access memory (DRAM) chip. It used a tiny capacitor and transistor to store each bit of data in a memory cell, which allowed much denser memory than before.

The next year, in 1971, Alan Shugart and his team at IBM engineered the "floppy disk", which allowed data to be shared among computers. The strategy of giving consumers technology to share information among themselves was extremely well received and started to shape future business decisions across the computing industry.

In 1973, “Ethernet” was developed by researchers at Xerox. It was a technology that could connect many computers over a local area network. This was a turning point and a big boost for the internet revolution, which was going to reach the mass population within a couple of decades. Ethernet networks are now bigger, faster, and much more advanced than the initial version. Ethernet is commonly available at four speeds, ranging from 10 Mbps up to 10,000 Mbps, and runs smoothly at each of them. When a faster version is introduced, it does not make the older ones obsolete; instead, connected devices negotiate a speed that both support, so the slower device sets the pace. This is useful when mixing two generations of technology.

On the programming side, C, which can be termed a major programming language, was developed in 1972 by Dennis Ritchie. C was quite unlike the previous programming languages and can be seen as a transitional language that offered a newer and better way of writing programs. C made heavy use of pointers and was created to be quick and powerful. However, because it works so close to the machine, C can be hard to read. It is often said to have addressed shortcomings of earlier languages such as “Pascal”. The syntax of the language was very simple, and it allowed low-level access to memory. This is why it is still in use to this day, and components of the language have been used in other major languages like C++ and Java.
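The way mixed-speed Ethernet devices settle on a common speed can be pictured as picking the fastest rate that both ends of a link support. The snippet below is a simplified sketch of that selection step only, not the actual auto-negotiation signalling. The device names and their supported speeds are made up for illustration.

```python
def negotiate_speed(device_a_speeds, device_b_speeds):
    """Return the fastest speed (in Mbps) that both link partners support, or None."""
    common = set(device_a_speeds) & set(device_b_speeds)
    return max(common) if common else None


# Hypothetical devices: an older network card and a newer switch port.
old_card = [10, 100]
new_switch_port = [10, 100, 1000, 10000]

print(negotiate_speed(old_card, new_switch_port))
```

The link ends up running at the best speed of the slower device, which is why older equipment keeps working when faster equipment is added to the network.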

Personal Computer Revolution

With the launch of the HP 3000, the HP 2640 series, the Apple I/II/III, the Apple Lisa and the IBM System/34, /36 and /38, the late ’70s and the ’80s saw a wave of minicomputers and personal computers, and consumers had plenty of options to choose from. Interestingly, Intel became the microchip supplier to IBM and created successful products like the Intel 80386, a 32-bit microprocessor that was several times faster than any Intel chip produced up to that date. In fact, it became a standard processor and was used by “Compaq” in one of its personal computers. The idea of a fast, multitasking computing device was a key motivator for tech companies, and demand for such devices eventually grew among consumers. During the same broad period, languages and tools like SQL, Mathematica, Octave, Matlab, and Python were released, advancing the software side of the computing industry alongside the hardware. They are still very popular and are used heavily for computationally intensive work.

Information Age or Internet Era

The Internet grew popular in the 1990s and was the most engaging technological invention that man had ever seen. Its roots lie in a fund established by the U.S. Advanced Research Projects Agency (ARPA) to research and develop a communication network between academia and government entities. ARPANET was launched in 1969 and became the first network of its kind. ARPA’s desire to create a network that could link knowledge together was not fully realized until around 1990, when Tim Berners-Lee (popularly known as the inventor of the World Wide Web) and others at the European Organization for Nuclear Research came up with a protocol based on hypertext to make information distribution easier.

TCP/IP, the protocol suite that ARPANET had adopted in the 1980s, is still very widely used in internet networking today. Private organizations followed the government’s lead in funding the Internet, and by the 1990s commercial organizations had started interconnecting their systems. In 1991, the World Wide Web (WWW) was released to the public; it remains one of the biggest revolutions in the computing industry. It made user-created web pages possible. A team of programmers at the U.S. National Center for Supercomputing Applications developed Mosaic, a graphical “browser” that made the Internet easier to use, and Netscape Communications Corp, founded in 1994, launched “Mosaic Netscape 0.9” to commercialize the newly invented technology.

Another push to the Internet revolution came with the introduction of HTML, which is more than just hypertext (a word or words that contain a link to another page). It describes how text and images should be displayed on a screen and where a click leads. What popularized HTML was that it could be read on any type of computing device and was very economical at the same time. Web designers could create rich pages that were small in size. Small file sizes are important on a network like the Internet, as they make it faster and easier to exchange information between computing devices separated by long distances.

The web was born with the invention of HTML. HTML made it simple to create websites that contained images and, eventually, sound and video. Within a very short period, everyone realized the potential of the web as a global communication tool and wanted to get hold of it as soon as possible. The web grew rapidly, and today millions of people access the Internet daily for purposes such as news, entertainment and shopping. Later versions of HTML increased its efficiency and gave authors more control over the behaviour of web pages.

Artificial Intelligence, Machine Learning, and Data Science

The term "Data Science" first appeared in 1974, when Peter Naur published his “Concise Survey of Computer Methods”. It was later put forward as a discipline separate from computer science by William Cleveland in 2001, when he published his paper "Data Science: An Action Plan for Expanding the Technical Areas of the Field of Statistics.”

Applying the famous Parkinson's Law, data expands until the available disk space is filled. This “data-disk” interaction feeds on itself: more data leads to buying more disks, and more disks lead to the creation of more data. Funnily enough, it seems to defy the usual economic principle of demand and supply. This chain of disk-data events is responsible for producing “big data”. Big data usually refers to a dataset so large that it becomes complex and difficult to analyze with regular database management tools. It is used to find patterns, understand human behaviour and make predictions about the future by analyzing the past. Big data techniques have since become popular in almost every industry.

The need to process big data more efficiently was met by the rise of cloud computing, which was built with the involvement of tech giants such as Amazon, Google, and Microsoft. One of the most important tools for analyzing big data on cloud platforms is Hadoop.

The standard computing norm is to transfer data to an algorithm. Hadoop follows the opposite principle. The data is so big that it cannot practically be moved to the algorithm. Instead, Hadoop pushes many copies of the algorithm to the machines where the data is stored.

It turns out that Hadoop is difficult to operate too, and it requires advanced computing skills. This created a market for analytic tools with simpler interfaces that run on top of Hadoop.

Adding to the technological progress around the world, the computing industry started shifting towards machine learning, which focuses on letting algorithms learn from the available data so they can make judgments and predictions on data they have not seen before. In general, machine learning is divided into three key categories:
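The idea of sending the algorithm to the data can be sketched without Hadoop at all. In the toy example below, each inner list stands in for the data held on one machine. The same small function is run against every partition and only the compact partial results are combined. The partition contents and function names are hypothetical, and a real Hadoop job would use its own APIs and run across a cluster.

```python
from collections import Counter

# Assumption: three hypothetical "nodes", each holding its own slice of the data.
partitions = [
    ["big data", "data science"],
    ["cloud computing", "big data"],
    ["data storage"],
]


def count_words(lines):
    """The 'algorithm' shipped to each node: count words in the local data only."""
    counts = Counter()
    for line in lines:
        counts.update(line.split())
    return counts


def run_job(partitions, task):
    """Run the task where each partition lives, then merge the small partial results."""
    partial_results = [task(part) for part in partitions]
    total = Counter()
    for partial in partial_results:
        total += partial
    return total


totals = run_job(partitions, count_words)
print(totals.most_common(3))
```

Only the word counts travel between nodes, not the raw text, which is the point of the design when the raw data is too large to move.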

- supervised machine learning algorithms

- unsupervised machine learning algorithms

- reinforcement machine learning algorithms
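To make the first category concrete, here is about the smallest supervised learning example possible: fitting a straight line to labelled examples with ordinary least squares and then predicting a value for an input the model has not seen. The data points are made up for illustration, and real projects would typically use a library such as scikit-learn rather than hand-written formulas.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up training data: inputs paired with known answers (labels).
hours_studied = [1, 2, 3, 4]
test_scores = [52, 54, 56, 58]

slope, intercept = fit_line(hours_studied, test_scores)

# Predict the label for an input that was not in the training data.
prediction = slope * 5 + intercept
print(slope, intercept, prediction)
```

Unsupervised learning would start from the inputs alone, without the known answers, while reinforcement learning would learn from rewards received after taking actions.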

Internet, Data Explosion and Financial Markets

With the widespread usage of the Internet, the financial markets became more efficient too. Investors can now get exposure to foreign stocks through instruments such as American Depositary Receipts (ADRs), subject to specific terms and conditions. Arbitrage strategies were even built around the difference between the price of an ADR and the price of the equivalent stock on its home exchange. The Internet brought with it an explosion of data, and by the mid-2000s it had democratized financial markets by providing tools and techniques that created a level playing field for all. However, the faster spread of information also led to an increase in volatility. The Internet allowed firms, financial markets and trading exchanges to move closer to equilibrium between supply and demand, which had previously been held back by barriers such as geographical boundaries and complex country-to-country regulations.

The Internet has brought firms together and promoted fair play in the financial markets while reducing inequality of access.

Adding more efficiency to the markets, algorithmic trading is the latest offering, combining the fields of statistics, computer science, and finance. Algorithmic trading began in the late 1980s and 1990s. It is often criticized for making the US market crash of 1987 worse than it would otherwise have been. It grew popular around 2001, when researchers showed that algorithmic strategies from IBM and HP, known as MGD and ZIP respectively, could beat human traders and generate better alpha. Algorithmic trading works on algorithms, or small sets of instructions, and thus gave way to high-frequency trading, which executes a huge number of trades in a very short period of time. This also increased liquidity in the markets and improved the pricing of assets.
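The ADR arbitrage idea rests on a simple piece of arithmetic: convert the home-market share price into dollars, scale it by the number of shares each ADR represents, and compare the result with the ADR's quoted price. The sketch below shows only that comparison. All numbers are invented for illustration, and it ignores fees, taxes, conversion costs and timing differences that matter in practice.

```python
def adr_implied_price(local_price, shares_per_adr, local_per_usd):
    """Dollar value of the underlying shares that one ADR represents."""
    return local_price * shares_per_adr / local_per_usd


def adr_spread(adr_market_price, local_price, shares_per_adr, local_per_usd):
    """Positive spread: the ADR trades above the value of its underlying shares."""
    implied = adr_implied_price(local_price, shares_per_adr, local_per_usd)
    return adr_market_price - implied


# Hypothetical inputs: a share at 800 in local currency, 2 shares per ADR,
# an exchange rate of 80 local units per US dollar, and an ADR quoted at $20.50.
spread = adr_spread(
    adr_market_price=20.50,
    local_price=800.0,
    shares_per_adr=2,
    local_per_usd=80.0,
)
print(spread)
```

A trader would only consider acting when the spread is larger than the total cost of trading both legs.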

Conclusion

The growth of computational power was predicted by Gordon Moore in 1965, when he laid down what became known as Moore’s law. He observed that the number of transistors on an integrated circuit had been doubling every year since the very first integrated circuits, a pace he later revised to a doubling roughly every two years.

Moore predicted at the time that this pattern would continue into the future. In recent years the trend has lost pace; nevertheless, it remains a remarkable growth story. Most experts, including Moore himself, expected Moore's Law to hold until around 2020-2025 and then start to lose its significance.

The journey of computing and computational devices over the last four to five centuries is quite phenomenal when compared with the more than 10,000 years of human history before it. It all started with the urge to perform calculations in a better way, and here we are a few centuries later, with high-speed computing devices doing millions of calculations in seconds. The future holds more such exemplary inventions and advanced techniques, which should make our lives easier and more hassle-free. All in all, modern computing has made people’s lives easier and more comfortable. Technological advancements let us stay in touch with billions of people in different parts of the world and communicate with each other with great ease.

Lastly, I’d like to leave you with a wonderful data representation that compares the data the human brain can hold with what a computing device currently can.
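The arithmetic behind this kind of doubling is worth seeing once, because exponential growth is easy to underestimate. The sketch below projects a transistor count forward for a given doubling period. The starting count of 1,000 is an arbitrary illustrative figure, and the function is a simple model of the trend, not a statement about any real chip.

```python
def projected_transistors(initial_count, years, doubling_period_years=2.0):
    """Project a count forward assuming it doubles every `doubling_period_years`."""
    return initial_count * 2 ** (years / doubling_period_years)


# Illustrative starting point: 1,000 transistors.
for years in (2, 10, 20):
    yearly = projected_transistors(1000, years, doubling_period_years=1.0)
    two_yearly = projected_transistors(1000, years, doubling_period_years=2.0)
    print(years, int(yearly), int(two_yearly))
```

Over 20 years, doubling every year multiplies the count by about a million, while doubling every two years multiplies it by about a thousand, so the choice of doubling period matters a great deal.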

Memory Scale (Credits: Visualcapitalist.com)

If you want to learn various aspects of Algorithmic trading then check out the Executive Programme in Algorithmic Trading (EPAT®). The course covers training modules like Statistics & Econometrics, Financial Computing & Technology, and Algorithmic & Quantitative Trading. EPAT equips you with the required skill sets to build a promising career in algorithmic trading. Enroll now!

Disclaimer: All data and information provided in this article are for informational purposes only. QuantInsti® makes no representations as to accuracy, completeness, currentness, suitability, or validity of any information in this article and will not be liable for any errors, omissions, or delays in this information or any losses, injuries, or damages arising from its display or use. All information is provided on an as-is basis.